Making 3D web apps with Blender and Three.js

Blender is widely regarded as the most popular open-source 3D modeling suite, and Three.js as the most popular WebGL library. Each has a huge user base of its own, yet the two are rarely combined to build interactive 3D visualizations for the Web. This is because such projects require two very different skill sets to work together.

Here we discuss ways to overcome these difficulties. So, how can we make fancy 3D web interactives based on Blender scenes? Basically, you have two options:

- Use the glTF exporter that comes with Blender, then code a Three.js application that loads the exported scene.

- Use a framework that provides this integration without coding, such as Verge3D, an extended version of Three.js.

This tutorial mostly covers the vanilla Three.js approach. At the end, we will also give some info about Verge3D.

Approach #1: Vanilla Three.js

Intro

We assume you already have Blender installed. If not, you can get it from here and just run the installer.

With Three.js, however, it's not that easy! The official Three.js installation guide recommends using the npm package manager, as well as the JS module bundler called webpack. You're also going to need a local web server to test your apps, and you will have to operate all of these from the command line. This tool chain will look familiar to seasoned web developers. Still, using it with Three.js can turn out to be quite non-trivial, especially for people with little or no coding skills (e.g. Blender artists, even those with some experience in Python scripting).

An easier way is to copy the static Three.js build into your project folder, add a few external dependencies (such as the glTF 2.0 loader), compose a basic HTML page that links all of these, and finally run a web server to open the app. Even better, Blender ships with Python, so you can just launch the simple web server from Python's standard library.

This latter approach is exactly what we discuss in this tutorial. If you don't want to reproduce it step by step, you can skip ahead and download the complete Three.js-Blender starter project. OK, let's begin!

Creating the Blender scene

This step seems to be pretty straightforward for a Blender artist, right? Well, you must get familiar with the limitations of the glTF 2.0 format, and WebGL in general! Particularly:

- Models must be low-poly to mid-poly so that the entire scene does not exceed several hundred thousand polygons. Usually 100k to 500k polys is fine.

- The camera should be assigned explicitly in your app via JavaScript; Three.js does not automatically render through a camera stored in the exported scene.

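- World shader nodes are not supported, and Blender lights are exported only as basic punctual lights (and only when the exporter's Punctual Lights option is enabled). Again, you'll need to use JavaScript to set up proper lighting.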

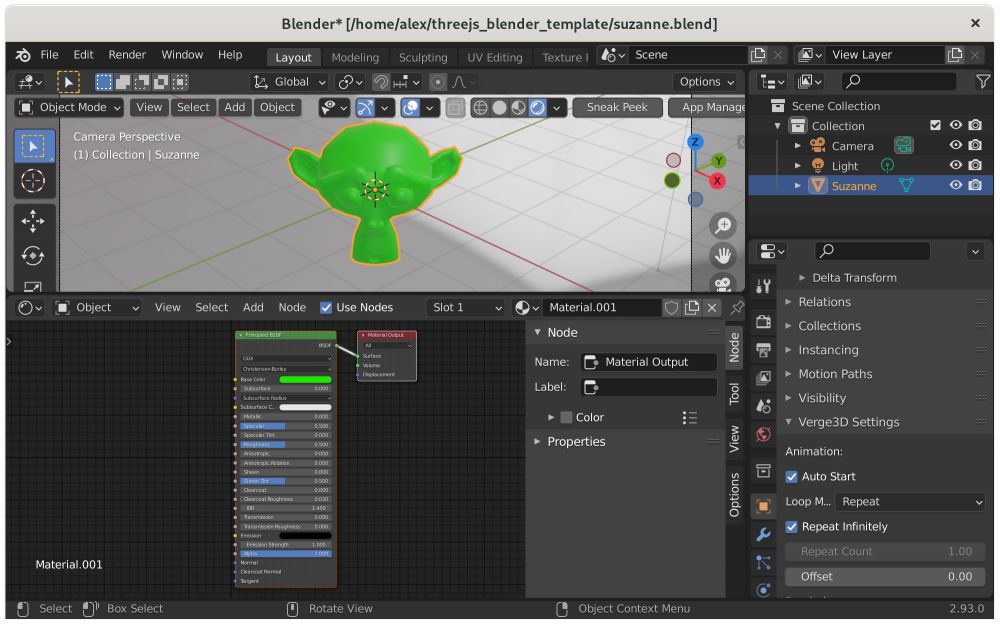

- Materials should be based on a single Principled BSDF node. Refer to the Blender Manual for more info.

- Only a limited set of textures is supported, namely Base Color, Metallic, Roughness, AO, Normal Map, and Emissive.

- At most two UV maps are supported. Metallic and Roughness textures should use the same UV map, because glTF packs them into a single texture.

- You can adjust the offset, rotation, and scale of your textures via a single Mapping node. No other UV modifications are supported.

- You can only animate a limited set of parameters: object position, rotation, and scale, shape key influence values, and bone transformations. Again, all animations must be initialized and controlled with JavaScript.

- And so on. You will learn about other limitations on a need-to-know basis.
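Some of these limits can be checked on the exported file itself. Here is a minimal Python sketch, assuming a .gltf file (plain JSON; for a .glb you would first have to extract its JSON chunk). It sums triangles over all triangle-list primitives and reports the highest number of UV sets (`TEXCOORD_n` attributes) used by any primitive. Faces are triangulated on export, so for quad-based models expect the count to be higher than Blender's face count. The function names and the default thresholds (taken from the rough figures above) are illustrative, not part of any library.

```python
import json

TRIANGLES = 4  # glTF primitive mode for triangle lists; also the default mode


def scene_budget(gltf: dict) -> dict:
    """Estimate the triangle count and maximum UV-set count of a parsed .gltf document."""
    accessors = gltf.get("accessors", [])
    triangles = 0
    max_uv_sets = 0
    # Each mesh is counted once, even if several nodes instance it.
    for mesh in gltf.get("meshes", []):
        for prim in mesh.get("primitives", []):
            attrs = prim.get("attributes", {})
            uv_sets = sum(1 for name in attrs if name.startswith("TEXCOORD_"))
            max_uv_sets = max(max_uv_sets, uv_sets)
            if prim.get("mode", TRIANGLES) != TRIANGLES:
                continue  # lines, points, strips and fans are not counted in this sketch
            # Indexed geometry: 3 indices per triangle; otherwise 3 vertices per triangle
            accessor_index = prim["indices"] if "indices" in prim else attrs["POSITION"]
            triangles += accessors[accessor_index]["count"] // 3
    return {"triangles": triangles, "max_uv_sets": max_uv_sets}


def budget_warnings(report: dict, max_triangles: int = 500_000, max_uv_sets: int = 2) -> list:
    """Turn a scene_budget() report into readable warnings (thresholds are illustrative)."""
    warnings = []
    if report["triangles"] > max_triangles:
        warnings.append(f"{report['triangles']} triangles exceeds the budget of {max_triangles}")
    if report["max_uv_sets"] > max_uv_sets:
        warnings.append(f"a primitive uses {report['max_uv_sets']} UV sets (max {max_uv_sets})")
    return warnings


def check_gltf_file(path: str) -> list:
    """Load a .gltf (JSON) file from disk and return any budget warnings."""
    with open(path, encoding="utf-8") as f:
        return budget_warnings(scene_budget(json.load(f)))
```

For example, `check_gltf_file("suzanne.gltf")` returns an empty list when the scene stays within both limits. Instanced meshes are counted only once, so treat the result as a lower bound for scenes that reuse the same mesh many times.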

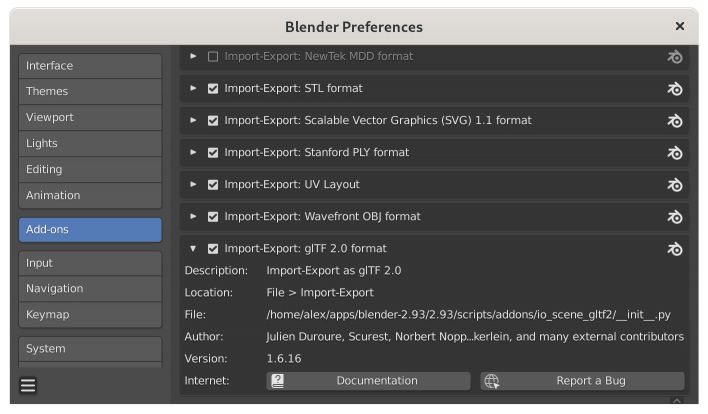

Exporting the scene to glTF 2.0

The procedure is as follows. First, open Blender Preferences, click Add-ons, then make sure that the Import-Export: glTF 2.0 format add-on is enabled.

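After that, you can export your scene to glTF via the File -> Export -> glTF 2.0 (.glb/.gltf) menu. That's it. Pay attention to the Format option in the export dialog: glTF Binary produces a single .glb file, while glTF Separate writes a .gltf file plus separate binary and texture files. The app below loads suzanne.gltf, so either export in the .gltf format under that name or change the file name in the code.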
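A .glb file still contains the same JSON scene description, just wrapped in a binary container. Per the glTF 2.0 specification, it starts with a 12-byte header (the magic bytes `glTF`, a container version, and the total length), followed by a JSON chunk and an optional binary chunk. The minimal Python sketch below pulls out that JSON chunk so you can inspect what the exporter actually wrote (meshes, materials, extensions). The function names are illustrative.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # the bytes b"glTF" read as a little-endian uint32
CHUNK_JSON = 0x4E4F534A  # the bytes b"JSON" read as a little-endian uint32


def read_glb_json(data: bytes) -> dict:
    """Return the JSON chunk of a binary glTF 2.0 (.glb) file as a dict."""
    if len(data) < 20:
        raise ValueError("too short to be a GLB file")
    # 12-byte header: magic, container version, total file length (uint32, little-endian)
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file (bad magic)")
    if version != 2:
        raise ValueError(f"unsupported GLB version {version}")
    if length > len(data):
        raise ValueError("truncated GLB file")
    # The first chunk must be JSON: uint32 chunk length, uint32 chunk type, then the payload
    chunk_length, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != CHUNK_JSON:
        raise ValueError("first chunk is not JSON")
    # The payload is padded with spaces to a 4-byte boundary, which json.loads tolerates
    return json.loads(data[20:20 + chunk_length].decode("utf-8"))


def read_glb_json_file(path: str) -> dict:
    """Read a .glb file from disk and return its JSON chunk."""
    with open(path, "rb") as f:
        return read_glb_json(f.read())
```

For example, `read_glb_json_file("suzanne.glb")["asset"]` shows the asset metadata, which typically names the exporter that generated the file.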

Adding Three.js builds and dependencies

You can download the pre-built version of Three.js from GitHub. Select the latest release, then scroll down to download the .zip (Windows) or .tar.gz (macOS, Linux) archive. In our first app we're going to use the following files from that archive (three.module.js is in the build folder; the other files are under examples/jsm):

- three.module.js — base Three.js module

- GLTFLoader.js — loader for our glTF files.

- OrbitControls.js — to rotate our camera with mouse/touchscreen.

- RGBELoader.js — to load fancy HDRI map used as environment lighting.
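Copying these files by hand works, but a small script makes the step repeatable whenever you upgrade Three.js. The minimal Python sketch below assumes the archive layout of recent releases (`build/` for the core module, `examples/jsm/` for the add-ons); verify the paths against the release you actually download. Because the flat project folder used in this tutorial does not mirror the archive, the script also scans the copied files for relative `import ... from './...'` lines whose targets are now missing, so you know what else to copy or edit. Bare specifiers such as `'three'` are not reported, since the page itself has to resolve those (e.g. with an import map). The function and constant names are hypothetical.

```python
import re
import shutil
from pathlib import Path

# Assumed locations inside an extracted Three.js release archive (check your release).
REQUIRED_FILES = {
    "build/three.module.js": "three.module.js",
    "examples/jsm/loaders/GLTFLoader.js": "GLTFLoader.js",
    "examples/jsm/controls/OrbitControls.js": "OrbitControls.js",
    "examples/jsm/loaders/RGBELoader.js": "RGBELoader.js",
}

# Static imports/re-exports with a relative specifier, e.g. from '../utils/Foo.js'.
# Side-effect imports without "from" and dynamic import() calls are not detected.
RELATIVE_IMPORT = re.compile(r"""from\s+['"](\.\.?/[^'"]+)['"]""")


def copy_three_files(release_dir, project_dir) -> list:
    """Copy the files flat into project_dir; return relative imports that no longer resolve."""
    release, project = Path(release_dir), Path(project_dir)
    missing = [src for src in REQUIRED_FILES if not (release / src).is_file()]
    if missing:
        raise FileNotFoundError(f"not found under {release}: {', '.join(missing)}")
    project.mkdir(parents=True, exist_ok=True)
    unresolved = []
    for src, dst in REQUIRED_FILES.items():
        target = project / dst
        shutil.copy2(release / src, target)
        for spec in RELATIVE_IMPORT.findall(target.read_text(encoding="utf-8")):
            # Resolve the specifier relative to the copied file's new location
            if not (target.parent / spec).is_file():
                unresolved.append(f"{dst}: {spec}")
    return unresolved
```

For example, `copy_three_files("path/to/extracted/archive", "path/to/project")` returns an empty list when every relative import still resolves; otherwise each entry names a copied file and the import it can no longer find.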

Creating the main HTML file

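Create the file index.html with the following content. For simplicity, it includes everything (HTML, CSS, and JavaScript). It expects the exported model (suzanne.gltf) and an HDR environment map (environment.hdr) in the same folder as the page: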

<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>Blender-to-Three.js App Template</title>
  <meta name="viewport" content="width=device-width, user-scalable=no, minimum-scale=1.0, maximum-scale=1.0">
  <style>
    body {
      margin: 0px;
    }
  </style>
</head>
<body>

  <!-- Recent Three.js releases ship example modules (loaders, controls) that import
       the core library by the bare name 'three'; this import map resolves that name
       to the local copy. It must appear before the module script below. -->
  <script type="importmap">
    {
      "imports": {
        "three": "./three.module.js"
      }
    }
  </script>
  <script type="module">
    import * as THREE from './three.module.js';
    import { OrbitControls } from './OrbitControls.js';
    import { GLTFLoader } from './GLTFLoader.js';
    import { RGBELoader } from './RGBELoader.js';

    let camera, scene, renderer;

    init();
    render();

    function init() {
      const container = document.createElement( 'div' );
      document.body.appendChild( container );

      camera = new THREE.PerspectiveCamera( 45, window.innerWidth / window.innerHeight, 0.25, 20 );
      camera.position.set( - 1.8, 0.6, 2.7 );

      scene = new THREE.Scene();

      // Load the HDR map first: it serves as both the background and the image-based lighting
      new RGBELoader()
        .load( 'environment.hdr', function ( texture ) {
          texture.mapping = THREE.EquirectangularReflectionMapping;
          scene.background = texture;
          scene.environment = texture;
          render();

          // model
          const loader = new GLTFLoader();
          loader.load( 'suzanne.gltf', function ( gltf ) {
            scene.add( gltf.scene );
            render();
          } );
        } );

      renderer = new THREE.WebGLRenderer( { antialias: true } );
      renderer.setPixelRatio( window.devicePixelRatio );
      renderer.setSize( window.innerWidth, window.innerHeight );
      renderer.toneMapping = THREE.ACESFilmicToneMapping;
      renderer.toneMappingExposure = 1;
      // Since r152, Three.js uses outputColorSpace (sRGB by default);
      // older releases used renderer.outputEncoding = THREE.sRGBEncoding instead.
      renderer.outputColorSpace = THREE.SRGBColorSpace;
      container.appendChild( renderer.domElement );

      // Orbit controls: re-render whenever the user rotates or zooms the camera
      const controls = new OrbitControls( camera, renderer.domElement );
      controls.addEventListener( 'change', render ); // use if there is no animation loop
      controls.minDistance = 2;
      controls.maxDistance = 10;
      controls.target.set( 0, 0, - 0.2 );
      controls.update();

      window.addEventListener( 'resize', onWindowResize );
    }

    function onWindowResize() {
      camera.aspect = window.innerWidth / window.innerHeight;
      camera.updateProjectionMatrix();
      renderer.setSize( window.innerWidth, window.innerHeight );
      render();
    }

    function render() {
      renderer.render( scene, camera );
    }
  </script>
</body>
</html>

You can find this file in the starter project on GitHub (linked above). So, what is going on in this code snippet? Well...

- Initializing the canvas, scene and camera, as well as WebGL renderer.

- Creating "orbit" camera controls by using the external OrbitControls.js script.

- Loading an HDR map for image-based lighting.

- Finally, loading the glTF 2.0 model we exported previously.

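If you merely open this HTML file in the browser, you'll see... nothing! This is a security measure imposed by browsers: a page opened from the local file system (a file:// URL) is restricted in what it may load, which typically blocks the ES modules and model files this app needs. Without a properly configured web server you won't be able to launch this web application. So let's run a server!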

Running a web server

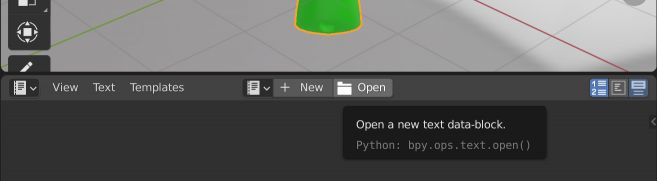

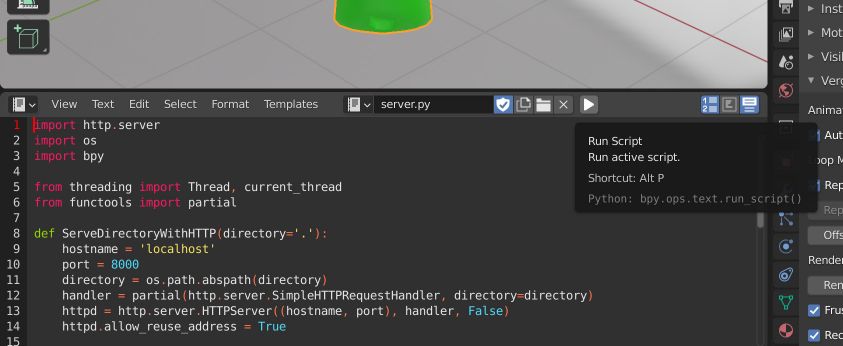

If we have Python in Blender, why not use it? Let's create a basic Python script with the following content (or just copy the file from the starter project):

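import http.server
import os
from functools import partial
from threading import Thread

import bpy


def ServeDirectoryWithHTTP(directory='.'):
    hostname = 'localhost'
    port = 8000
    directory = os.path.abspath(directory)
    # Serve files from `directory` rather than from Blender's working directory
    handler = partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    # bind_and_activate=False lets us set allow_reuse_address before binding the socket
    httpd = http.server.HTTPServer((hostname, port), handler, False)
    httpd.allow_reuse_address = True
    httpd.server_bind()
    httpd.server_activate()

    def serve_forever(httpd):
        with httpd:
            httpd.serve_forever()

    # Run the server in a daemon thread so Blender's UI stays responsive
    # (Thread.setDaemon() is deprecated; pass daemon=True instead)
    thread = Thread(target=serve_forever, args=(httpd,), daemon=True)
    thread.start()


# Serve the folder containing this script; bpy.path.abspath() expands Blender's '//' relative paths
app_root = os.path.dirname(bpy.path.abspath(bpy.context.space_data.text.filepath))
ServeDirectoryWithHTTP(app_root)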

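Save it as server.py in your project folder, next to index.html, since the script serves the folder it is saved in. In Blender, switch to the Text Editor area.

Click Open, locate and open that script, then click the 'Play' (Run Script) icon to launch the server.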

This server will be running in the background until you close Blender. Cool, yeah?
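The script above keeps no handle on the server, so the only way to stop it is to close Blender, and running the script a second time in the same session will typically fail because port 8000 is still taken. Below is a minimal sketch of a variant that returns the server object so you can shut it down and start it again. It does not use bpy, so it also runs in a regular Python 3.7+ interpreter. The function names are hypothetical, and `ThreadingHTTPServer` (instead of `HTTPServer`) is a swapped-in choice that serves the browser's parallel requests concurrently.

```python
import http.server
import threading
from functools import partial


def start_server(directory, host="localhost", port=8000):
    """Serve `directory` over HTTP from a daemon thread and return the server object."""
    handler = partial(http.server.SimpleHTTPRequestHandler, directory=str(directory))
    # ThreadingHTTPServer handles each request in its own thread (Python 3.7+)
    httpd = http.server.ThreadingHTTPServer((host, port), handler)
    threading.Thread(target=httpd.serve_forever, daemon=True).start()
    return httpd


def stop_server(httpd):
    """Stop a server created by start_server() and free its port."""
    httpd.shutdown()      # makes serve_forever() return; blocks until it has
    httpd.server_close()  # closes the listening socket


# Example: port=0 asks the OS for any free port; the chosen one is in server_address.
# server = start_server("path/to/project", port=0)
# print("Serving on http://localhost:%d/" % server.server_address[1])
# stop_server(server)
```

Call `stop_server()` on the returned object before starting another server on the same port.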

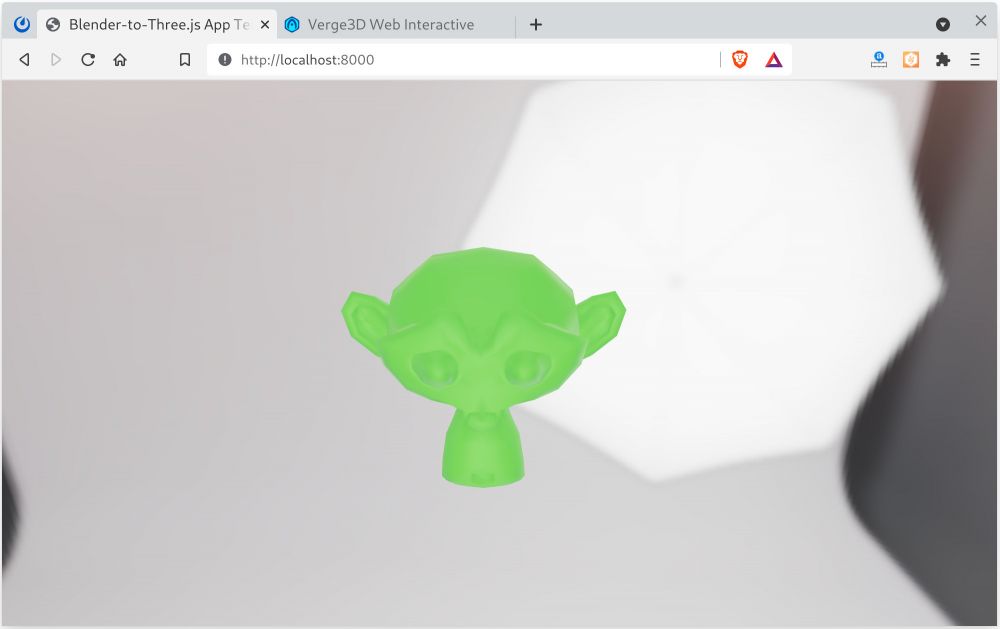

Running the app

Open http://localhost:8000/ in your browser. You should see the following page:

Try to rotate or zoom the model. You will surely notice some issues with the rendering: namely, it does not look exactly like the Blender viewport. There are some things we can do to improve this, but don't expect much: Three.js is Three.js and Blender is Blender.

Building the pipeline

Below is a recommended approach for creating web apps with Three.js and Blender:

- Use the starter project to quickly create and deploy your apps. Feel free to upgrade that template; it's all open source.

- It's much easier to load a single HDR map for environment lighting than to tweak multiple light sources with JavaScript. And it usually renders faster, too!

- Blender comes first, Three.js goes second. This means you should try to design as much as possible in Blender. This will save you a great deal of coding.

- Take some time to learn more about glTF 2.0, so that you fully understand the limitations and constraints of this format.

- For anything beyond loading a mere model, you can refer to the myriad of official Three.js examples.
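Every visitor downloads the environment map, and the sample app only starts loading the model after the HDR map has arrived, so it pays to keep an eye on the map's resolution. The minimal Python sketch below reads the width and height from a Radiance .hdr header. It assumes the common layout: a `#?` signature line, header lines, an empty line, and then a resolution line such as `-Y 1024 +X 2048`. Other orientations are rejected rather than guessed. The function names are illustrative.

```python
def read_hdr_size(data: bytes) -> tuple:
    """Return (width, height) parsed from the header of a Radiance .hdr file."""
    lines = data.split(b"\n")
    if not lines[0].startswith(b"#?"):
        raise ValueError("missing '#?' signature; not a Radiance .hdr file")
    # Header lines run until the first empty line; the resolution line comes right after
    try:
        blank = lines.index(b"", 1)
    except ValueError:
        raise ValueError("end of header not found") from None
    if blank + 1 >= len(lines):
        raise ValueError("resolution line missing")
    parts = lines[blank + 1].split()
    # Standard orientation "-Y <height> +X <width>" (rows top to bottom, columns left to right)
    if len(parts) != 4 or parts[0] != b"-Y" or parts[2] != b"+X":
        raise ValueError(f"unsupported resolution line: {lines[blank + 1]!r}")
    return int(parts[3]), int(parts[1])


def hdr_size_from_file(path: str, probe_bytes: int = 4096) -> tuple:
    """Read just the beginning of a .hdr file and return its (width, height)."""
    with open(path, "rb") as f:
        return read_hdr_size(f.read(probe_bytes))
```

For example, `hdr_size_from_file("environment.hdr")` reads only the first few kilobytes of the file, so it stays fast even for very large maps.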

Approach #2: Verge3D

Verge3D is kinda Three.js on steroids. It includes a bunch of tools to sweeten the creation of 3D web content based on Blender scenes. As a result, this toolkit puts artists, instead of programmers, in charge of a project.

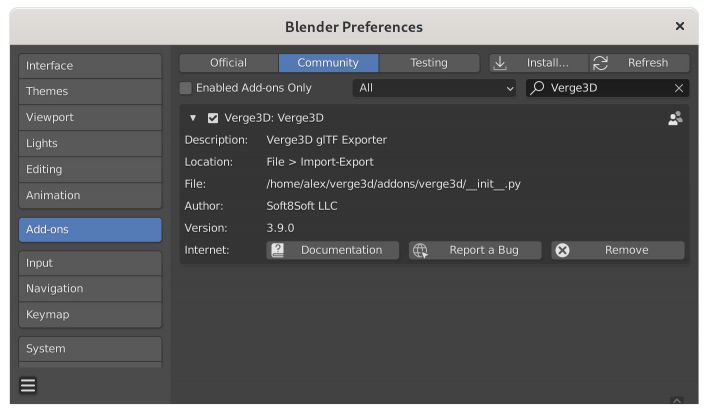

The toolkit costs some money, but you can experiment with a trial version whose only limitation is that it shows a watermark. Installation is fairly simple: get the installer (Windows) or zip archive (macOS, Linux), then enable the Blender add-on that is shipped with Verge3D:

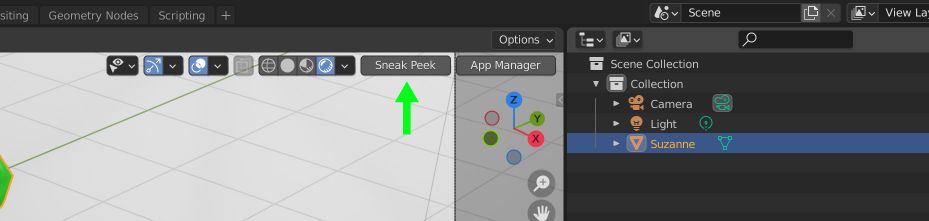

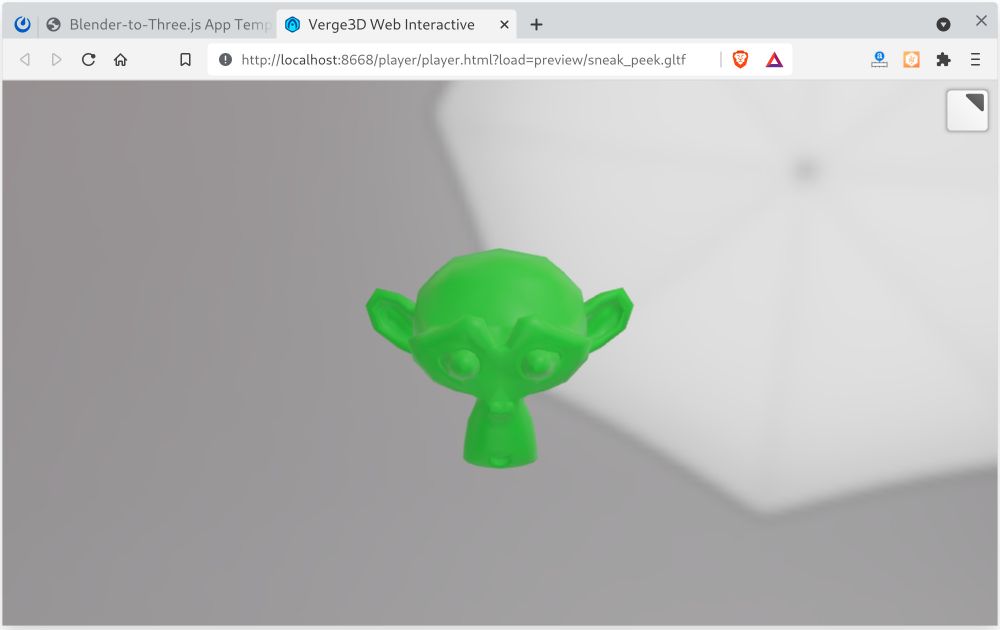

Verge3D comes with a convenient preview feature called Sneak Peek:

This magic button exports the Blender scene to a temporary location and immediately opens it in the web browser:

There is no need to create a project from scratch, write any JavaScript, or care about the web server: Verge3D does all of this for you.

The WebGL rendering is usually consistent with the Blender viewport (especially if you switch to the real-time renderer Eevee). This is because Verge3D tries to accurately reproduce most Blender features, such as native node-based materials, lighting, shadows, animation, morphing, etc. It works similarly to vanilla Three.js, i.e. it first exports the scene to a glTF 2.0 asset and then loads it in the browser via JavaScript... except you don't need to write any code.

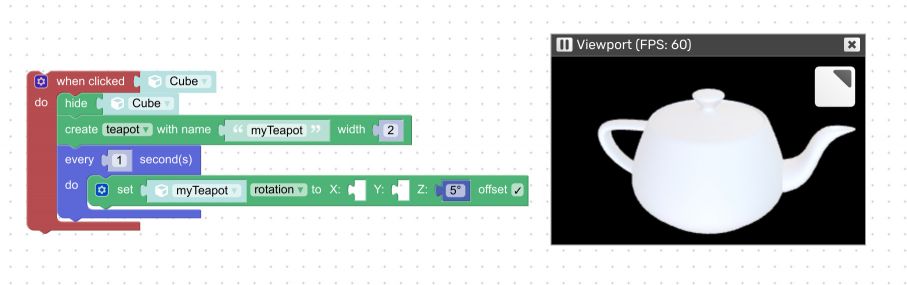

But how can we make the app interactive, if not with JavaScript code? Verge3D takes a different approach: it comes with a Scratch-like scripting environment called Puzzles. For example, to turn the default Blender cube into a nice spinning Utah teapot, you can employ the following "code":

This logic is quite self-explanatory. Under the hood, these blocks are converted to JavaScript that calls Verge3D APIs. You can still write your own JavaScript, or even add new visual blocks as plugins. In any case, Verge3D remains compatible with Three.js, so you can use Three.js snippets, examples, or apps found on the web.

Verge3D comes with many other useful features, including the App Manager, AR/VR integration, a WordPress plugin, Cordova/Electron builders, a physics engine, tons of plugins, materials, and asset packs, as well as ready-to-use solutions for the e-commerce and e-learning industries. Discussing all of these is beyond the scope of this article, so refer to this beginner-level tutorial series to learn more about the toolkit.

Which approach to choose?

Three.js offers a quite convincing feature: it's free! Blender is free too, so if you have plenty of time, you are all set! You can make something really big, share it, get some recognition, or sell your work without paying a buck up front.

On the other hand, if paying some money for a license is not a big deal for you (or your company), you might consider Verge3D. And this is not only because it's a more powerful variant of Three.js that will help you meet a deadline. Verge3D is designed to be an artist-friendly tool that is worth looking into if you're using Blender in your pipeline.